We have been seeing a lot of pointless indexing traffic from web crawlers around the world, which puts unnecessary load on the production servers in my current project. It is pointless in the sense that the site is mainly in Swedish and hence of little interest to people living in, for instance, Russia, Ukraine or Kazakhstan, where these search engines are popular. A simple way to deal with unwanted crawler traffic is of course the robots.txt file; however, there is no guarantee that every crawler will respect it, so having Internet Information Services (IIS) block requests from specific user agents is a safer approach.

Adding request filtering in IIS 7.5 GUI

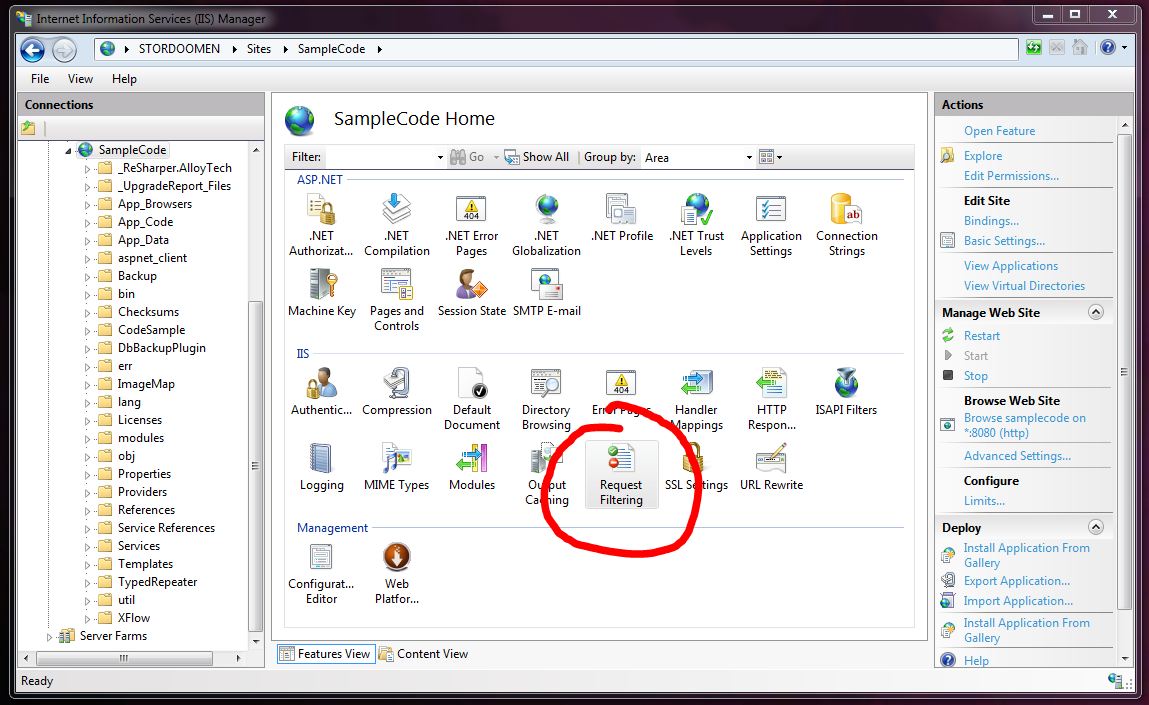

Filtering your requests can be done quite easily through the IIS interface. Go to the site that you want to filter for and bring up the Features View. Here you will find an icon called Request Filtering in the IIS area.

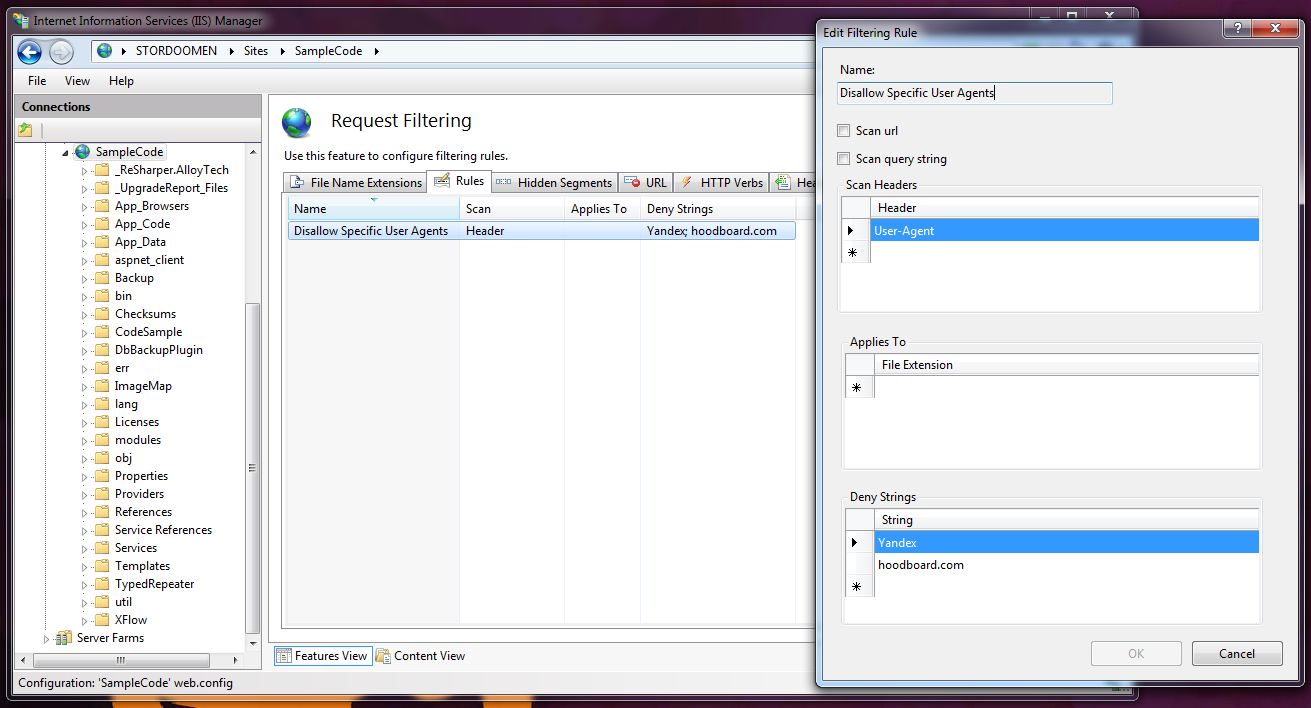

Opening this feature lets you, among other things, add the rules that govern your filtering. The rule used throughout this post blocks all requests whose User-Agent header contains Yandex or hoodboard.com.

You can also reach the Request Filtering feature by selecting the top node, Home, in the Connections pane; rules added here propagate down to every site on the server.

Adding request filtering rules through web.config

Having IIS filter requests for your site can just as easily be configured in your web.config file. Under the security section of system.webServer you can add a requestFiltering node that holds all the filtering rules you need. Below is one matching the rule that we previously created through the IIS GUI.

    <?xml version="1.0" encoding="utf-8"?>
    <configuration>
      <system.webServer>
        <security>
          <requestFiltering>
            <filteringRules>
              <filteringRule name="Disallow Specific User Agents"
                             scanUrl="false"
                             scanQueryString="false">
                <scanHeaders>
                  <add requestHeader="User-Agent" />
                </scanHeaders>
                <denyStrings>
                  <add string="Yandex" />
                  <add string="hoodboard.com" />
                </denyStrings>
              </filteringRule>
            </filteringRules>
          </requestFiltering>
        </security>
      </system.webServer>
    </configuration>

IIS 7.5 giving 500.19 error for duplicate request filtering rules

Adding a request filtering rule to a website through the IIS 7.5 interface makes IIS update the site’s web.config file in the same manner as above. Should you instead add the rule to the top Home node, IIS puts it in a different configuration file: applicationHost.config, found in the %WinDir%\System32\Inetsrv\Config\ directory on your system drive. This is not a configuration file where the average web developer usually goes poking around, but suppose that a filtering rule such as the one above has been added to the Home node through the IIS 7.5 interface in order to try the feature out.

Furthermore, if you are working on a website that uses some sort of XML mass updating to build the configuration files for your various environments, your site root’s web.config will likely be overwritten each time you build the site. As a result, rules added to a site only through the IIS interface will not stick; you would have to add them to either a web.master.config or one of the environment-specific files. All in all, you may well end up with one rule in the far-away applicationHost.config and another with the same name in your website’s web.config. If you try browsing to your site at this point, all you will get is a rather unhelpful standard IIS error screen.

u_ex130221.log

    #Software: Microsoft Internet Information Services 7.5
    #Version: 1.0
    #Date: 2013-02-21 22:49:32
    #Fields: date time s-ip cs-method cs-uri-stem cs-uri-query s-port cs-username c-ip cs(User-Agent) sc-status sc-substatus sc-win32-status time-taken
    2013-02-21 22:49:32 127.0.0.1 GET / - 80 - 127.0.0.1 Mozilla/5.0+(compatible;+MSIE+9.0;+Windows+NT+6.1;+WOW64;+Trident/5.0) 500 19 183 39809
    2013-02-21 22:49:56 127.0.0.1 GET / - 80 - 127.0.0.1 Mozilla/5.0+(compatible;+MSIE+9.0;+Windows+NT+6.1;+WOW64;+Trident/5.0) 500 19 183 0

Checking the site’s IIS logs at least gives us a little more to work with. As the log above shows, the status code (500) and substatus (19) appear towards the end of each request entry, followed by the Win32 status 183, which is ERROR_ALREADY_EXISTS and already hints at a duplicate. The article "The HTTP status code in IIS 7.0, IIS 7.5, and IIS 8.0" over at Microsoft Support tells us that 500.19 means that the configuration data is invalid. In our case, this is of course due to the two filtering rules having the same name.
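To make the clash concrete, here is a rough sketch of what the server-level entry might look like. It assumes the rule was added to the Home node exactly as described above; the surrounding contents of applicationHost.config are omitted, and the exact layout IIS writes may differ.

```xml
<!-- Sketch only: relevant fragment of
     %WinDir%\System32\Inetsrv\Config\applicationHost.config
     after adding the rule at server level. Other elements omitted. -->
<system.webServer>
  <security>
    <requestFiltering>
      <filteringRules>
        <!-- Same name as the rule in the site's web.config,
             so the site ends up with a duplicate entry and answers 500.19 -->
        <filteringRule name="Disallow Specific User Agents"
                       scanUrl="false"
                       scanQueryString="false">
          <scanHeaders>
            <add requestHeader="User-Agent" />
          </scanHeaders>
          <denyStrings>
            <add string="Yandex" />
            <add string="hoodboard.com" />
          </denyStrings>
        </filteringRule>
      </filteringRules>
    </requestFiltering>
  </security>
</system.webServer>
```

Because the site inherits this collection, a filteringRule with the same name in the site’s own web.config counts as a second entry under the same key.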

If you would like to alter an inherited filtering rule for one of your sites, you can first remove the inherited one by name with a remove element, and then add it again with the new conditions (here, a denyStrings element that only lists Yandex).

    <?xml version="1.0" encoding="utf-8"?>
    <configuration>
      <system.webServer>
        <security>
          <requestFiltering>
            <filteringRules>
              <remove name="Disallow Specific User Agents" />
              <filteringRule name="Disallow Specific User Agents"
                             scanUrl="false"
                             scanQueryString="false">
                <scanHeaders>
                  <add requestHeader="User-Agent" />
                </scanHeaders>
                <denyStrings>
                  <add string="Yandex" />
                </denyStrings>
              </filteringRule>
            </filteringRules>
          </requestFiltering>
        </security>
      </system.webServer>
    </configuration>

Using a <clear /> element in place of the remove element will of course also work, but note that it clears all of the inherited filtering rules from the website in question, not just the one being redefined.
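For comparison, a minimal sketch of the clear variant could look like the following. It reuses the rule name and deny string from the listings above; any other inherited rules the site still needs would have to be re-added by hand, which is an assumption about your setup rather than something shown in this post.

```xml
<?xml version="1.0" encoding="utf-8"?>
<configuration>
  <system.webServer>
    <security>
      <requestFiltering>
        <filteringRules>
          <!-- Drops every filtering rule inherited from higher levels -->
          <clear />
          <!-- Re-add only the rules this site should have -->
          <filteringRule name="Disallow Specific User Agents"
                         scanUrl="false"
                         scanQueryString="false">
            <scanHeaders>
              <add requestHeader="User-Agent" />
            </scanHeaders>
            <denyStrings>
              <add string="Yandex" />
            </denyStrings>
          </filteringRule>
        </filteringRules>
      </requestFiltering>
    </security>
  </system.webServer>
</configuration>
```

The remove element is the more surgical choice when the server carries several shared rules; clear is simpler when the site should define its rule set entirely on its own.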